I have recently used two of the ‘modern’ approaches to application development:

a) writing my code against an API framework

b) specifying my entities and some behavior, then pressing a button on a code generator

For a non-trivial application, I have to say that I prefer having a robust API framework coupled with various tool helpers over the code generators.

My two recent use cases are both complex development environments (IDEs).

One was written using the Microsoft Visual Studio Extensibility (VSX) universe. This is a rich and barely documented API into the framework that is used to create Visual Studio itself. This has a steep learning curve, but we (the developers of our tool) ended up learning what we needed. We were sometimes frustrated because an API would fall into Microsoft proprietary code, but generally it behaved “as we expected”.

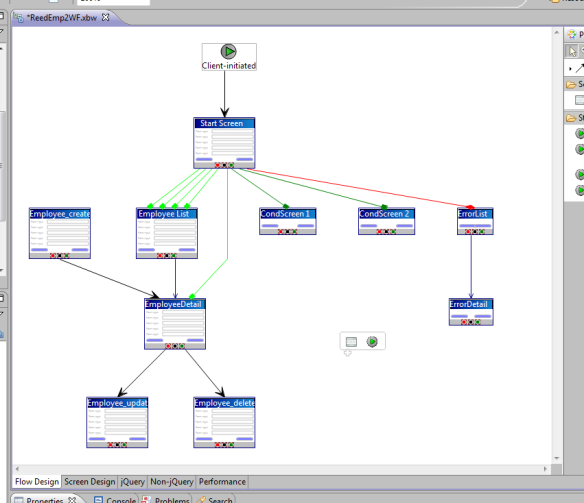

The other product is also a complex programming environment, but based on Eclipse. The original team chose to use the “Eclipse Modeling Framework” (EMF) with its code generators. The development workflow was to define our persistent objects and their relationships in an Eclipse tool. We then had the EMF generate the class definitions, factory objects, and relationships as Java.

It’s interesting that, like the Visual Studio internals, the open source Eclipse also has poor documentation. However, while the Visual Studio newsgroups were pretty helpful, the Eclipse (and Java) newsgroups are often condescending and parochial.

The EMF (and its graphical sibling ‘GMF’ which we also used) got an initial designer up and running lickety split. It does have a lot of benefits for simple and demonstration projects. However… we are tasked with adding lots of detailed functionality, behaviors, and even product branding.

This is when the fun started…

With a code generator approach, there is a METRIC TON of code generated, and a lot of it is duplicated or almost-duplicated. That’s OK for toys, but when we needed to start adding behavior that cannot be expressed in the Eclipse EMF designer, we ended up adding a lot more duplicated code (like a cross-cutting aspect, which was not fun). This was compounded by the imperfect RE-generation process.

By re-generation – consider the use case where you change the basic EMF model – usually adding classes and properties. Then you trigger the code regeneration process.

I have to give the Eclipse guys credit: they do a pretty good job of keeping our other changes to the Java files in place. However, the oodles of files in the code-generation process mean we need to both check the generated code in many places and copy/paste our customizations into all the new files.
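For readers who have not seen how hand edits survive regeneration: as far as I know, the EMF merge step keys off a Javadoc tag. Members tagged `@generated` are fair game for the generator, while members whose tag is changed to `@generated NOT` (or removed) are left alone. The sketch below only illustrates that convention. `WidgetImpl` and its members are hypothetical, and a real EMF implementation class would extend EMF base classes rather than stand alone like this.

```java
// Hypothetical class for illustration; only the Javadoc tags follow the EMF convention.
class WidgetImpl {
    private String name = "";

    /**
     * Untouched generator output: the generator may rewrite this on regeneration.
     *
     * @generated
     */
    public String getName() {
        return name;
    }

    /**
     * Hand-customized: the "NOT" marks this body as ours, so the merge
     * should keep it instead of overwriting it.
     *
     * @generated NOT
     */
    public void setName(String newName) {
        // Custom behavior that the model editor cannot express.
        name = (newName == null) ? "" : newName.trim();
    }
}
```

The tag protects one member at a time, so it does nothing for a customization that has to be repeated across many generated files.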

As an example, consider adding a user-visible type, like a new control for the tool palette. It’s really a type with several manifestations: tool palette properties, an instantiated-type property sheet, etc.

In the API Framework approach, it is a manual process: we create (derive) the classes for the new type, then add the class specifics into a table. The framework reads the table, and the new type (or control) appears on the tool palette and is hooked into the system. You then customize (the real objective of adding the type).
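The table-driven registration described above can be sketched roughly as follows. This is not the VSX API (our tool there was not written in Java). Every name here (`PaletteControl`, `GaugeControl`, `ControlTable`) is made up to show the shape of the idea: derive a class, add one row to a table, and let the framework discover it.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical base class that the framework knows how to host.
abstract class PaletteControl {
    abstract String displayName();
}

// Step 1: derive a class for the new type.
class GaugeControl extends PaletteControl {
    @Override
    String displayName() {
        return "Gauge";
    }
}

// Hypothetical registration table that the framework reads.
class ControlTable {
    // LinkedHashMap keeps rows in registration order for the palette.
    private final Map<String, Supplier<PaletteControl>> rows = new LinkedHashMap<>();

    // Step 2: add the class specifics as one row.
    void register(String typeId, Supplier<PaletteControl> factory) {
        rows.put(typeId, factory);
    }

    // The framework builds the palette from whatever is in the table.
    List<String> paletteEntries() {
        List<String> names = new ArrayList<>();
        for (Supplier<PaletteControl> factory : rows.values()) {
            names.add(factory.get().displayName());
        }
        return names;
    }

    // The framework instantiates a control when the user drops it on a design surface.
    PaletteControl create(String typeId) {
        Supplier<PaletteControl> factory = rows.get(typeId);
        if (factory == null) {
            throw new IllegalArgumentException("Unknown type: " + typeId);
        }
        return factory.get();
    }
}

class PaletteDemo {
    public static void main(String[] args) {
        ControlTable table = new ControlTable();
        table.register("gauge", GaugeControl::new);
        System.out.println(table.paletteEntries());
    }
}
```

The point of the design is that adding a type touches one new class and one table row, which is easy to write down as a procedure.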

Writing down the procedure, the files, and the expectations was pretty straightforward, and the other developers had no problem following it.

In the Eclipse EMF code generation approach – we edit the model in the Eclipse tool (which is saved to an XML file). In the modeling tool we define the new type (control), its properties, its relationship to the other types – IOW everything you would expect in a modeling tool – and all is good here – this is what I want.

The difficulty comes in the Java code re-generation phase. Changes to the EMF model both create new files (for the new types) and cause assorted changes to be merged into various other files.

The generation and merge process is pleasing in that it works as well as it does, but there are still quite a few files that need to be verified/changed/examined. This particularly tweaked me because a new version of Eclipse has the generator using a slightly different placement of braces and indenting. Hence running a directory-wide code compare is basically useless (even a flexible tool like ‘Beyond Compare’ flags a change when next-line braces changed to the same-line flavor…), to say nothing about the generator now using an underscore a little bit more often than before…
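One workaround for the brace and indentation noise is to normalize both versions of a file before comparing them. The following is a crude sketch, not something we actually built: `DiffNormalizer` is a hypothetical helper that pulls next-line braces up to the same line and collapses whitespace. It is regex-based, so it will also mangle braces inside string literals and comments, and it does nothing about the extra underscores.

```java
// Hypothetical helper: normalize formatting so that only real changes show up in a diff.
class DiffNormalizer {
    static String normalize(String source) {
        return source
            .replaceAll("\\s*\\{", " {")      // next-line braces become same-line braces
            .replaceAll("[ \\t]+", " ")       // collapse runs of spaces and tabs
            .replaceAll("(?m)^ +| +$", "")    // strip leading and trailing spaces per line
            .replaceAll("\\n{2,}", "\n")      // drop blank lines
            .trim();
    }

    public static void main(String[] args) {
        String oldStyle = "class A\n{\n    void f()\n    {\n    }\n}\n";
        String newStyle = "class A {\n  void f() {\n  }\n}\n";
        // The two differ only in formatting, so the normalized forms should match.
        System.out.println(normalize(oldStyle).equals(normalize(newStyle)));
    }
}
```

Running each side of the comparison through a formatter with a fixed profile would be the more robust version of the same idea.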

In short, when creating a non-trivial application I would rather have a steeper learning curve than having to deal with on-going pain.
